The Vehicle Industry Research Center of Széchenyi István University welcomed visitors with a live demonstration at the exhibition of the Artificial Intelligence Coalition of the Digital Success Programme. The center's staff showed how autonomous vehicles perceive their environment.

Data-driven solutions powered by artificial intelligence offer new ways to improve efficiency in many fields and in almost every industry. The professional day and exhibition organized by the Artificial Intelligence Coalition drew attention to exactly this.

On display at the exhibition was the self-driving car jointly developed by the Systems and Control Lab of SZTAKI and the Vehicle Industry Research Center of Széchenyi István University in Győr. “The autonomous vehicle, built on a Nissan Leaf, is equipped with several kinds of sensors, which it uses to find its way, even in traffic,” said Dr. Alexandros Soumelidis, senior research fellow at SZTAKI.

Dr. Ferenc Szauter, head of the Vehicle Industry Research Center, said that within the field of self-driving vehicles, the center's exhibit focused on environment perception methods based on artificial intelligence and machine vision.

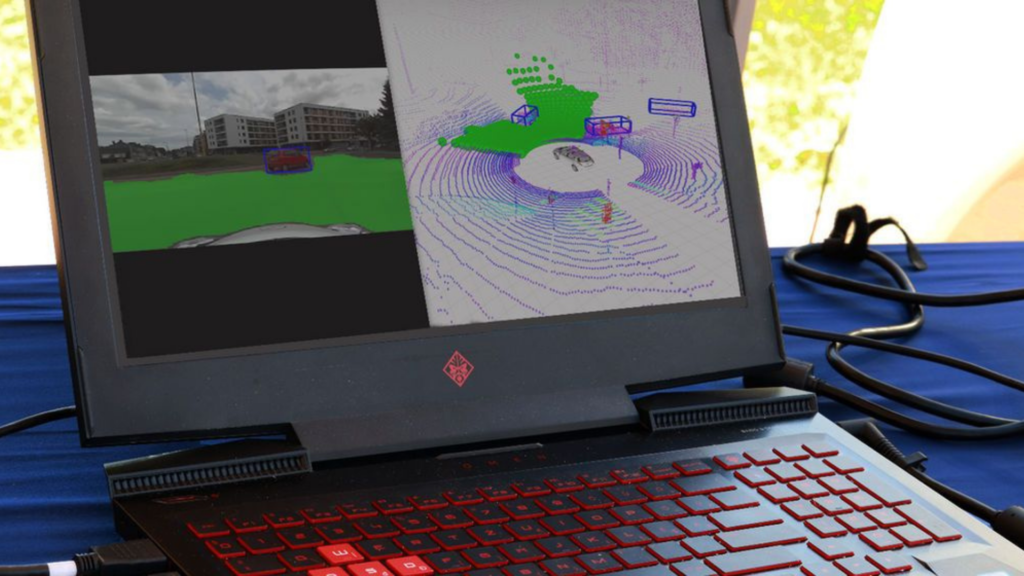

For the exhibition, the research center prepared an interactive demonstration in which the vehicle detected pedestrians walking in front of it. A monitor showed live how the vehicle recognizes human figures in the camera's field of view and, using the LIDAR data, also displays the recognized people in three-dimensional space.

“For environment perception we developed a method that can fuse the camera and the LIDAR, so the two sensors can be used as a single system,” emphasized Norbert Markó, research engineer at the Vehicle Industry Research Center.

“Based on the camera image, the vehicle can recognize, much as human vision does, which objects are in its field of view. At present we are focusing on recognizing the drivable lane surface, pedestrians, and vehicles such as passenger cars, trucks, or even bicycles,” continued János Hollósi, research engineer at the research center.

“The machine-vision-based artificial intelligence system can determine which pixels of the camera image belong to each object type,” added Dr. Ernő Horváth, research group leader at the research center.
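Assigning every pixel to an object type is commonly called semantic segmentation. The following is only a minimal sketch of the final step of such a system, assuming a model has already produced a score per class for each pixel; the class list, the function names, and the toy scores are hypothetical and are not taken from the research center's software.

```python
# Hypothetical class list, loosely following the object types named in the article.
CLASSES = ["background", "drivable_lane", "pedestrian", "vehicle"]


def label_map(scores):
    """Return, for every pixel, the index of the class with the highest score.

    scores[row][col] is a list with one score per entry in CLASSES.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


def pixels_of(labels, class_name):
    """Return the (row, col) coordinates of all pixels assigned to class_name."""
    index = CLASSES.index(class_name)
    return [
        (r, c)
        for r, row in enumerate(labels)
        for c, value in enumerate(row)
        if value == index
    ]


# Toy 2x3 "image": made-up scores standing in for the output of a trained model.
scores = [
    [[0.7, 0.1, 0.1, 0.1], [0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.1, 0.7]],
    [[0.1, 0.8, 0.05, 0.05], [0.1, 0.8, 0.05, 0.05], [0.2, 0.6, 0.1, 0.1]],
]

labels = label_map(scores)
pedestrian_pixels = pixels_of(labels, "pedestrian")
```

In this toy example the bottom row is labeled as drivable lane and a single pixel in the top row as pedestrian. A real system would run the same per-pixel decision on full-resolution camera frames.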

Based on the recognized objects and the distance information of their individual points, the software system builds a three-dimensional model of the vehicle's surroundings, which is needed for safe self-driving. This information is made available in a form that further algorithms, for example route planning or obstacle avoidance, can interpret and process. Unlike human vision, the camera image alone yields only the position of each object on the camera's image plane: that is, where the object is in the image, but not where it is located in real space.
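Why a single camera image fixes only the position on the image plane can be illustrated with the standard pinhole camera model. The sketch below is an illustration under assumptions, not the research center's code: the intrinsic parameters `fx`, `fy`, `cx`, `cy` and the two sample points are made up, and points are assumed to be given in the camera's own coordinate frame with `z` pointing forward.

```python
def project_to_image(point, fx, fy, cx, cy):
    """Project a 3D point (x, y, z) in the camera frame to pixel coordinates (u, v).

    Pinhole model: u = fx * x / z + cx, v = fy * y / z + cy.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics for a 1280x720 camera.
FX, FY, CX, CY = 800.0, 800.0, 640.0, 360.0

# Two points on the same viewing ray: one 5 m ahead, one 20 m ahead.
near = (1.0, 0.5, 5.0)
far = (4.0, 2.0, 20.0)

near_pixel = project_to_image(near, FX, FY, CX, CY)
far_pixel = project_to_image(far, FX, FY, CX, CY)
```

Both points land on the same pixel, so the pixel position alone cannot say whether the object is 5 m or 20 m away. The depth along the viewing ray has to come from another source, such as a range sensor.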

According to Péter Kőrös, professional lead of the Autonomous Competence Center of Széchenyi István University, the aim at present is not to determine from the camera image, with centimeter accuracy, how far a seen object is from the vehicle. Human vision cannot do this either; it only provides an estimate good enough for a person to get around with reasonable safety. In the vehicle, it is not the camera but the LIDAR sensors that determine the distance of surrounding objects with centimeter accuracy. The LIDAR generates a so-called 3D point cloud, in which the vehicle's surroundings are represented as spatial points at a given density.
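One common way to combine the two sensors is to project each LIDAR point into the camera image, read the object type at that pixel, and take the distance from the LIDAR point itself. The article does not describe the center's fusion method in this detail, so the following is only a sketch of that generic approach. It assumes the points have already been transformed into the camera frame through a calibration step that is not shown, and all names, intrinsics, and data are hypothetical.

```python
import math

PEDESTRIAN = 2  # hypothetical class index in the per-pixel label map


def label_points(points, labels, fx, fy, cx, cy):
    """Attach a class label to every point that falls inside the image.

    points: (x, y, z) tuples in the camera frame, z pointing forward.
    labels: labels[row][col] holds the class index of each pixel.
    """
    height, width = len(labels), len(labels[0])
    labeled = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, not visible in the image
        u = math.floor(fx * x / z + cx)  # pixel column
        v = math.floor(fy * y / z + cy)  # pixel row
        if 0 <= u < width and 0 <= v < height:
            labeled.append(((x, y, z), labels[v][u]))
    return labeled


def nearest_distance(labeled, class_index):
    """Return the smallest range to a point of the given class, or None."""
    ranges = [
        math.sqrt(x * x + y * y + z * z)
        for (x, y, z), cls in labeled
        if cls == class_index
    ]
    return min(ranges) if ranges else None


# Toy 8x6 label map: row 4 is drivable lane (1), one pixel is a pedestrian.
labels = [[0] * 8 for _ in range(6)]
labels[4] = [1] * 8
labels[3][7] = PEDESTRIAN

# Toy point cloud: a pedestrian point, a road point, and a point behind the car.
cloud = [(3.0, 0.0, 4.0), (0.0, 2.0, 8.0), (0.0, 0.0, -2.0)]

labeled = label_points(cloud, labels, fx=4.0, fy=4.0, cx=4.0, cy=3.0)
closest_pedestrian = nearest_distance(labeled, PEDESTRIAN)
```

Here the camera supplies the object type and the point cloud supplies the range, which matches the division of labor described above: the pedestrian point ends up 5 m from the sensor origin, a figure the image alone could not provide.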